The Curious Similarity Between LLMs and Quantum Mechanics

Follow me on X at @robleclerc

I began working with neural networks and evolutionary algorithms in 1999, particularly within the field of Artificial Life (ALife). Back then, the most vexing question was how digital systems could achieve open-ended evolution: evolving to higher and higher levels of complexity without stagnating or running in circles.

It’s been about 16 years since I left academia, but about six months ago I decided to dive in and get up to speed on the transformer architecture to see what exactly made it so powerful. I kept noticing something strange: many of the concepts behind these models mirrored features and phenomena from quantum mechanics. This got me thinking: are transformers proving so powerful because they’ve inadvertently captured key design principles of nature, giving them their open-endedness?

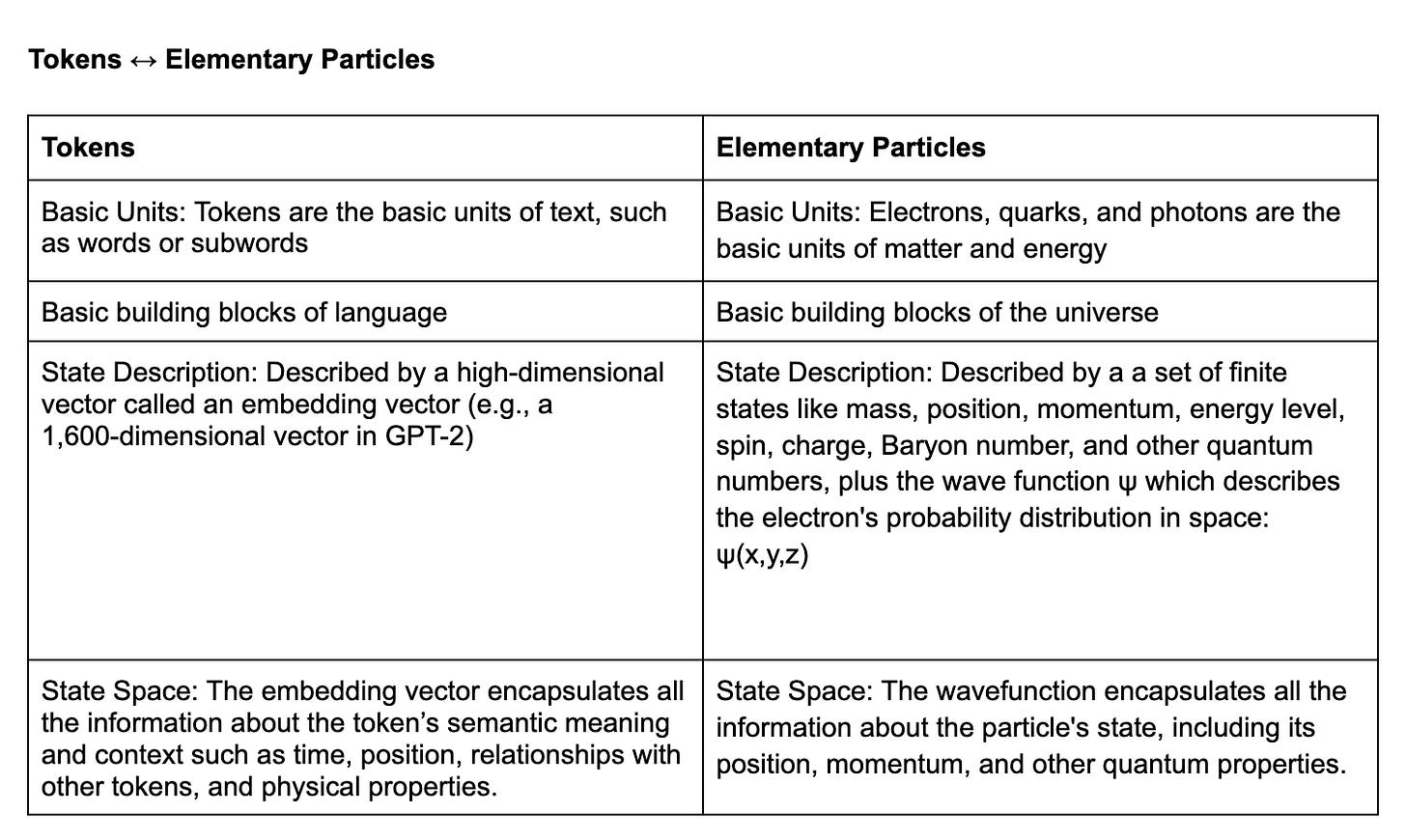

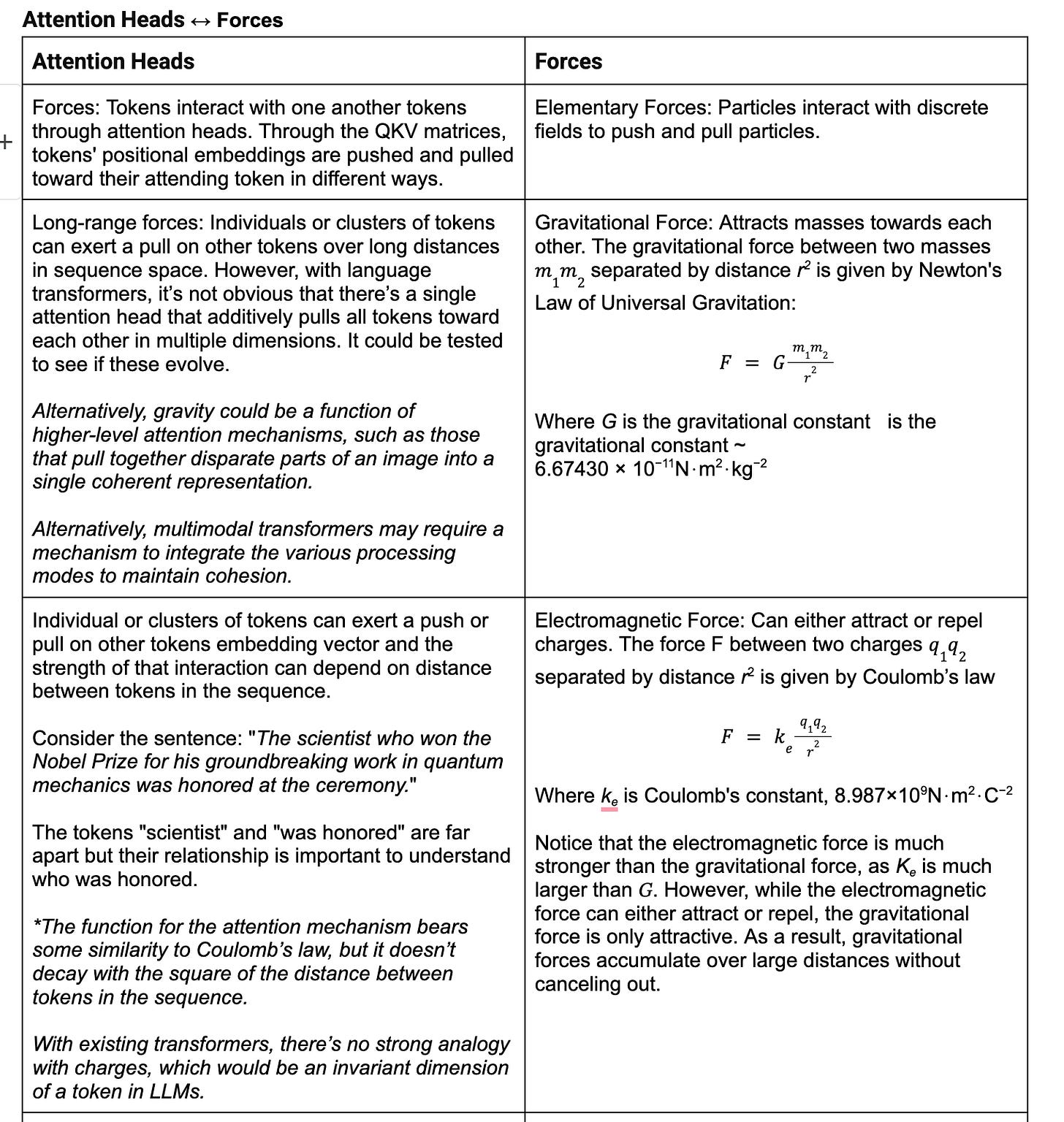

Take tokens, for example. Before context clarifies their meaning, they linger in a kind of semantic superposition—like particles existing in multiple states at once. Similarly, self-attention heads bind words across sentences like quantum entanglement, where “he” in one paragraph instantly locks onto “Bob” in another, no matter the distance. Even embedding vectors, those high-dimensional containers of meaning, behave like probability waves that collapse into definite interpretations.
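To make the "no matter the distance" point concrete, here is a minimal sketch in Python with NumPy. Everything in it is an assumption made for illustration: the 4-dimensional embeddings are hand-picked, the raw embeddings stand in for the learned query and key projections, and positional encodings are left out entirely. Real transformers do include position information, so this is the idealized case, not how any production model behaves.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - x.max())
    return e / e.sum()

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    d = query.shape[-1]
    scores = keys @ query / np.sqrt(d)
    return softmax(scores)

# Hypothetical toy embeddings (hand-picked, not taken from any real model).
emb = {
    "Bob":     np.array([2.0, 0.0, 1.0, 0.0]),
    "he":      np.array([2.0, 0.0, 0.5, 0.0]),
    "went":    np.array([0.0, 1.0, 0.0, 0.5]),
    "home":    np.array([0.0, 0.5, 0.0, 1.0]),
    "because": np.array([0.1, 0.2, 0.0, 0.3]),
}

def weight_he_to_bob(sentence):
    """Attention weight that the token 'he' places on 'Bob' in this ordering."""
    keys = np.stack([emb[token] for token in sentence])
    weights = attention_weights(emb["he"], keys)
    return weights[sentence.index("Bob")]

# Same tokens, different distance between "Bob" and "he".
near = ["went", "home", "because", "Bob", "he"]
far = ["Bob", "went", "home", "because", "he"]

w_near = weight_he_to_bob(near)
w_far = weight_he_to_bob(far)
print(f"near: {w_near:.3f}  far: {w_far:.3f}")
```

Because the scores depend only on how well the query and key vectors match, moving "Bob" further from "he" leaves the weight unchanged in this stripped-down setting. That is the sense in which attention "locks on" regardless of distance; it is an analogy to entanglement, not an instance of it.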

These parallels aren’t perfect (and I’m not claiming transformers literally run on quantum rules). But the overlap feels too striking to ignore. Below, I’ve tried to map out the analogies side-by-side (screenshots of tables, because Substack is annoyingly allergic to table formatting). I’d love to hear your thoughts.

I was just writing up a little 'for fun' paper for the end of a Stanford continuing education class on LLMs with this intro. I was trying to keep it even a bit more generic to help capture the imagination but the metaphorical similarities are striking here...something about how we encode high complexity in a process feels shared.

"Probabilities, Interference, Resonance, Uncertainty: AI Complexity Metaphors a Century after Quantum Mechanics

If the rise of compelling but probabilistic language models, built on the complex resonance (attention) effects across a vast corpus of text, is surprising, you may want to examine the scientific toolbox behind the last century's most revolutionary breakthrough: Quantum Mechanics.

Imagine a planet inexplicably leaving our Solar System; that is how the observation of α-decay felt under classical mechanics. Spontaneous radiation could only be explained with probabilistic physics. Traditional equations didn’t allow a bundle of protons and neutrons to escape the nuclear force, but in new radium experiments, they did. In a breakthrough paradigm shift, scientists modeled the complex and counterintuitive behavior of α-decay with the probability amplitudes of Quantum Mechanics. The new approach used a toolkit built on wave equations for probability amplitudes, and it allowed a particle bundle to ‘tunnel’ through a barrier when the interference patterns of those waves were just right, expelling it from the nucleus at random.

A century later, large language models demonstrated another paradigm-changing approach, producing responses that could plausibly pass a Turing Test and standardized exams across much of written human understanding. In a metaphorically similar fashion, they achieved these results not by layering a rules-based approach on explicit first principles and definitions, but by building probability curves from the deeper interference, both constructive and destructive, that words (tokens) have on each other, even at a significant distance in a text.

The comparison here is conceptual rather than mathematically isomorphic. The universal function approximation property of neural networks depends on non-linearities (ReLU, softmax) being introduced in the hidden and output layers. This is unlike the Schrödinger equation, which is linear, so its wave-function solutions can be superposed. But there are also interesting metaphorical similarities to explore. When a photon travels through a double slit, the probability of detecting it at a given point on a wall must account for the constructive and destructive interference of both paths. Language and meaning also change as we add terms, which may constructively or destructively interfere. When a language model reaches the word “Dog,” it must calculate new probabilities based on the prior word: imagine “hot,” “brown” or “missing” as examples."

I was googling for examples of this while writing when I found your piece here. Thanks for capturing this as well! You have taken the comparison a layer deeper than I risked going in an AI class discussion.

One of my primary interests is the recognition and study of patterns, including the type you're describing here. Yes, I believe your inquiry is worthwhile--it's likely you're "on to something." Please continue to pursue these ideas.